PWN Rome: Artificial Intelligence and Gender Equality

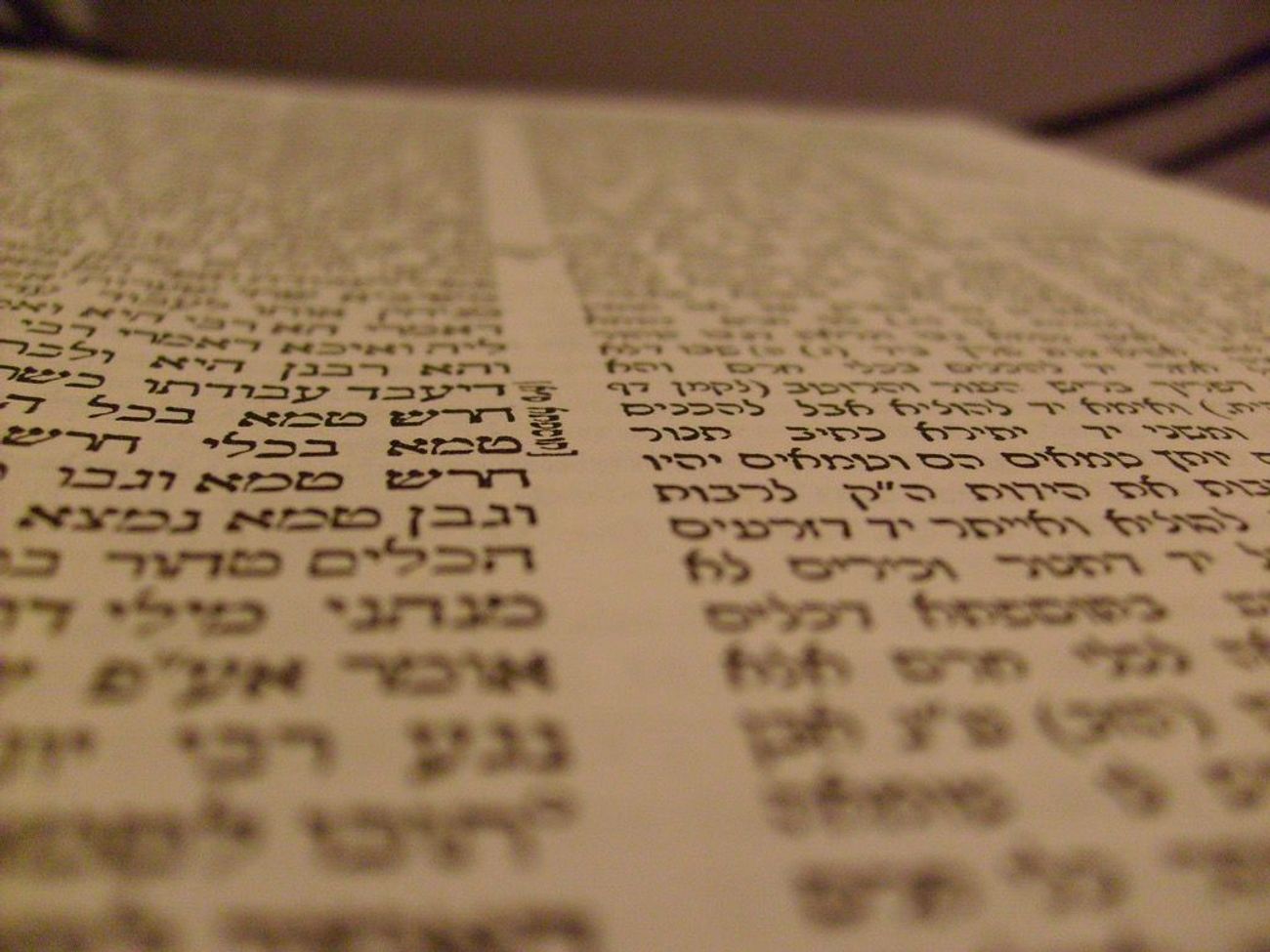

On 14 June 2023 the European Parliament adopted its negotiating position on the Artificial Intelligence (AI) Act. The rules would ensure that AI developed and used in Europe is fully in line with EU rights and values, including human oversight, safety, privacy, transparency, non-discrimination, and social and environmental wellbeing.

Clelia Piperno is a PWN Rome member, CEO of the Babylonian Talmud Translation Project and an academic passionate about innovation and AI. Clelia wanted to share with the PWN Global Community some thoughts about AI and how it can affect the fundamental right to non-discrimination. Here are her thoughtful insights on the subject. If you'd like to continue the conversation with Clelia, we encourage you to connect with her via the PWN Member platform, or via her LinkedIn profile at the bottom of the article.

The EU AI Act reflects the same approach the Commission has already adopted on other issues, such as medical device software. In that case the approach made sense, because it was a regulatory intervention in a single, well-defined context. However, when we move into the broader world of AI, we are dealing with a global system that ingests data in a general, non-specific way, and that therefore needs stricter regulation.

Regulation should provide for risk classes designed to guarantee levels of reliability and safety through a system of checks, compliance and certification procedures applied ex ante, not ex post. Intervening ex post on a piece of fake news, for example, risks making it go viral (if it hasn't already done so!).

Only in rare cases do algorithms make explicitly discriminatory decisions (so-called direct discrimination) by basing their predictions on prohibited characteristics such as race, ethnicity or gender. More often we see indirect discrimination, i.e., a disproportionate and unjustified unfavorable impact on individuals belonging to certain groups. This can happen because the system mimics previous decisions, including any discriminatory ones. In a common law system, where past rulings shape future ones, it is better not to imagine what could happen in a judgment involving ethnicity. Think of what could happen in a case involving violence against a woman, or think of past situations where women did not have access to certain roles. Sit with that for a minute... uncomfortable, isn't it?

The system can also give weight to characteristics that, while not sensitive in themselves, serve as proxies for protected characteristics such as gender or ethnicity. For example, the postal code can serve as a proxy for ethnicity, because in some cities certain neighborhoods are predominantly inhabited by particular ethnic groups. In essence, AI recognizes and finds patterns and correlations in data that we don't immediately and consciously think about.
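To make the proxy effect concrete, here is a minimal sketch on invented toy data. The records, the postal codes and the `approve` rule are all hypothetical. The rule never looks at the protected attribute, yet because postal code and group are correlated, approval rates still diverge between groups.

```python
from collections import Counter

# Hypothetical toy records: (postal_code, group).
# The protected attribute "group" is never passed to the decision rule.
applicants = [
    ("00100", "A"), ("00100", "A"), ("00100", "A"), ("00100", "B"),
    ("00200", "B"), ("00200", "B"), ("00200", "B"), ("00200", "A"),
]

def approve(postal_code: str) -> bool:
    # Hypothetical "blind" rule that only looks at the postal code,
    # e.g. one learned from past decisions favouring one neighbourhood.
    return postal_code == "00100"

def approval_rate_by_group(records):
    approved = Counter()
    total = Counter()
    for postal_code, group in records:
        total[group] += 1
        if approve(postal_code):
            approved[group] += 1
    return {group: approved[group] / total[group] for group in total}

print(approval_rate_by_group(applicants))  # {'A': 0.75, 'B': 0.25}
```

Removing the protected attribute from the inputs is therefore not enough on its own: the disparity survives through the correlated feature.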

The problem is that if we try to equalize favorable and unfavorable errors across two groups whose base rates differ, the system will make more mistakes overall and we risk discriminating against particular individuals: two people with the same characteristics could be evaluated differently depending on the group they belong to.

Situations like this, i.e., a different base rate between groups, are often endemic to protected groups. For this reason, it is not just prudent but essential to take the time to explain the reasons behind decisions based on machine learning to human decision-makers, and to combine old evaluation techniques with new ones.
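The trade-off between equal error rates and equal treatment can be illustrated with a minimal sketch on invented numbers. The scores, outcomes and thresholds below are hypothetical, and only one kind of error (false positives) is equalized to keep the example small. With one shared threshold, identical scores get identical decisions but the error rates differ; equalizing the error rates requires group-specific thresholds.

```python
# Hypothetical scored records: (risk_score, actual_outcome) for two groups
# with different base rates of the positive outcome.
group_a = [(0.9, 1), (0.8, 1), (0.7, 1), (0.6, 0), (0.3, 0)]  # base rate 3/5
group_b = [(0.9, 1), (0.6, 0), (0.4, 0), (0.3, 0), (0.2, 0)]  # base rate 1/5

def base_rate(records):
    # Share of people whose actual outcome is positive.
    return sum(outcome for _, outcome in records) / len(records)

def false_positive_rate(records, threshold):
    # Share of people with a negative actual outcome who are nonetheless flagged.
    negative_scores = [score for score, outcome in records if outcome == 0]
    flagged = [score for score in negative_scores if score >= threshold]
    return len(flagged) / len(negative_scores)

# One shared threshold: same score, same decision, but unequal error rates.
print(false_positive_rate(group_a, 0.5), false_positive_rate(group_b, 0.5))  # 0.5 0.25

# Group-specific thresholds equalize the error rates, but now a person
# scoring 0.4 is flagged in group B and would not be in group A.
print(false_positive_rate(group_a, 0.5), false_positive_rate(group_b, 0.4))  # 0.5 0.5
```

In the second case group B also gains an extra false positive, which mirrors the point above: equalizing errors between groups can mean more mistakes overall and unequal treatment of individuals with the same score.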

Even without reconstructing the full logical path of a system, researchers can still identify, at least in part, the factors that influence its decisions. One method involves modifying the characteristics used by the system one at a time and observing which ones influence the result positively or negatively.
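The one-at-a-time method described above can be sketched as a simple sensitivity analysis. This is a minimal illustration, not a production explainability tool: `black_box_score`, its weights and the feature names are hypothetical, and the code only treats the model as an opaque function from inputs to a score.

```python
from typing import Callable, Dict

Features = Dict[str, float]

def black_box_score(x: Features) -> float:
    # Hypothetical opaque model: we only observe inputs and outputs.
    return 0.5 * x["income"] + 0.3 * x["years_employed"] - 0.4 * x["postal_code_risk"]

def one_at_a_time_effects(
    model: Callable[[Features], float], x: Features, delta: float = 1.0
) -> Dict[str, float]:
    # Change one characteristic at a time by `delta`, keep the others fixed,
    # and record how much the model's output moves relative to the baseline.
    baseline = model(x)
    effects = {}
    for name in x:
        perturbed = dict(x)
        perturbed[name] += delta
        effects[name] = model(perturbed) - baseline
    return effects

applicant = {"income": 3.0, "years_employed": 2.0, "postal_code_risk": 1.0}
for name, effect in one_at_a_time_effects(black_box_score, applicant).items():
    direction = "raises" if effect > 0 else "lowers" if effect < 0 else "does not change"
    print(f"{name}: {direction} the score by {abs(effect):.2f}")
```

A feature such as the hypothetical `postal_code_risk` showing a strong effect is exactly the kind of signal that should prompt a closer look for a proxy of a protected characteristic. For models with interacting features, results depend on the starting point and step size, so this is only a first approximation.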

The AI Act relies largely on the good faith of manufacturers: it asks them not to produce algorithms "intended to distort human behavior", whose sale is prohibited. But it is enough to produce an algorithm that is compliant, yet ready to be put into the hands of an operator who can manipulate it to produce malware.

The regulatory bar needs to be raised, and regulation must be drafted in settings where women are adequately represented, with certification schemes that carry very significant penalties in case of violation. But above all, a process of global digital literacy needs to be initiated, as it is the only real tool that will allow a conscious use of AI.

Paraphrasing Don Milani, "We can affirm that the difference between who will discriminate, and who will not, is simply a thousand pieces of digital information".

About Clelia Piperno

Clelia Piperno is CEO of the Babylonian Talmud Translation Project, a lawyer and an academic. She graduated in Political Science and was a student of Prof. Giuliano Amato. In 2016 she was named Woman of the Year by the online magazine "Lilith - Independent, Jewish and Frankly Feminist."

Over the course of her career, she has acted as a consultant for several Italian public institutions, such as the Ministry of Foreign Affairs, the Ministry of Justice, the Presidency of the Council of Ministers and ANCI (as an expert of the Presidency of the Council of Ministers' Commission for the management of funds for the reconstruction of the Balkans), as well as for the European Parliament and the European Commission.

She is the creator and CEO of the Babylonian Talmud Translation Project, financed with 12 million euros by the Ministry of Education, Universities and Research in collaboration with the National Research Council and the Union of Italian Jewish Communities.

Date: July 2023

Author: Clelia Piperno, PWN Rome and CEO of the Babylonian Talmud Translation Project

Copy Editor: Rebecca Fountain, Marketing Consultant, PWN Global
